In the second half of our remote sensing class we have more or less moved on from simple image interpretation and onto ways of altering or combining images to produce views of a given area that are better suited for interpretation. The specific tasks we learned in this lab were creating subsets, conducting image fusion, applying radiometric enhancement techniques, linking images to Google Earth, and resampling.

Subsets

There are two ways to create a subset. One way is to use an inquire box, and the other is to use an area of interest (AOI) shapefile. An inquire box works well if your area of interest is a simple rectangle, but suppose your area of interest is not a rectangle, like a county. In that case one would use an area of interest shapefile.

Above is an image created by an inquire box subset.

Above is a subset created by using a shapefile of the area of interest.
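
The difference between the two subset methods can be sketched in a few lines of NumPy. This is only an illustration, not how ERDAS IMAGINE does it internally: the function names `subset_by_box` and `subset_by_mask` are made up for this example, the image is a tiny single-band array, and the AOI is assumed to have already been converted from a shapefile into a boolean mask.

```python
import numpy as np

def subset_by_box(image, row_min, row_max, col_min, col_max):
    """Rectangular subset, similar in spirit to an inquire box.
    Bounds are pixel indices; the max bounds are exclusive."""
    return image[row_min:row_max, col_min:col_max]

def subset_by_mask(image, aoi_mask, nodata=0):
    """Irregular subset: keep pixels inside the AOI mask, set the rest
    to nodata, then crop to the bounding box of the mask."""
    row_idx = np.where(np.any(aoi_mask, axis=1))[0]
    col_idx = np.where(np.any(aoi_mask, axis=0))[0]
    clipped = np.where(aoi_mask, image, nodata)
    return clipped[row_idx[0]:row_idx[-1] + 1, col_idx[0]:col_idx[-1] + 1]

# A 6x6 single-band "image" with values 1..36.
image = np.arange(1, 37).reshape(6, 6)

# Rectangular subset: rows 1-3, columns 2-4.
box = subset_by_box(image, 1, 4, 2, 5)

# Hypothetical triangular AOI standing in for an irregular county outline.
rr, cc = np.indices(image.shape)
aoi_mask = (rr >= 1) & (rr <= 4) & (cc >= 1) & (cc <= rr)
irregular = subset_by_mask(image, aoi_mask)

print(box)
print(irregular)
```

The box subset keeps every pixel inside the rectangle, while the mask subset still comes back as a rectangular array but with everything outside the AOI set to the nodata value.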

Image Fusion

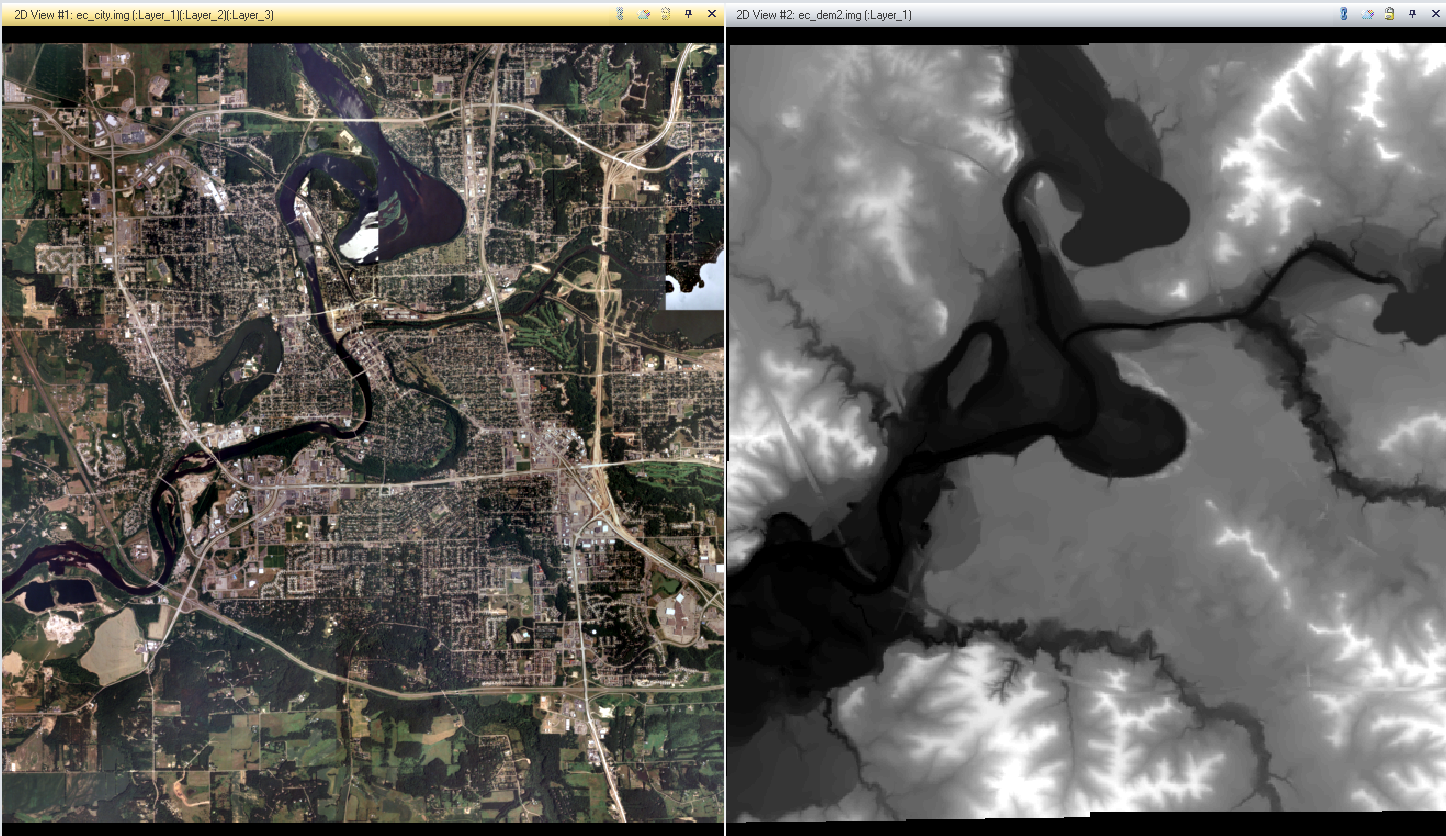

Image fusion is an operation in which two or more images are combined to create an image better suited for visual interpretation. In this instance, I conducted a pan-sharpen procedure, combining a coarse but colored image with a panchromatic black-and-white image. The resulting image has a high spatial resolution and also has color.

The image on the left is the original reflective image. The one on the right is that image fused with a panchromatic image of the same area.
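
To give a feel for what pan sharpening does to the pixel values, here is a minimal sketch of a Brovey-style ratio method in NumPy. This is an assumption for illustration only; the lab did not record which algorithm ERDAS IMAGINE applied, and the function name `brovey_pansharpen` and the tiny arrays are invented for the example.

```python
import numpy as np

def brovey_pansharpen(multispectral, pan, scale):
    """Brovey-style pan sharpening sketch.
    multispectral: (bands, rows, cols) coarse color image
    pan:           (rows * scale, cols * scale) panchromatic image
    scale:         integer ratio between the two pixel sizes"""
    # Upsample each coarse band onto the pan grid by repeating pixels.
    ms_up = np.repeat(np.repeat(multispectral, scale, axis=1), scale, axis=2)
    ms_up = ms_up.astype(float)
    # Intensity of the coarse image: the mean across bands.
    intensity = ms_up.mean(axis=0)
    # Ratio of sharp pan brightness to coarse intensity (0 where intensity is 0).
    ratio = np.divide(pan.astype(float), intensity,
                      out=np.zeros_like(intensity), where=intensity > 0)
    # Scale every band by the ratio, which injects the pan detail.
    return ms_up * ratio

# Coarse 3-band image (2x2 pixels) and a 4x4 panchromatic image.
multispectral = np.array([[[10, 20], [30, 40]],
                          [[20, 40], [60, 80]],
                          [[30, 60], [90, 120]]], dtype=float)
pan = np.arange(1, 17).reshape(4, 4) * 10.0

sharpened = brovey_pansharpen(multispectral, pan, scale=2)
print(sharpened.shape)
```

The output has the pixel grid of the panchromatic image, the ratios between the bands (the color) come from the coarse image, and the overall brightness pattern comes from the panchromatic image.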

Radiometric Enhancement Techniques

Radiometric enhancement techniques are used to enhance an image's spectral and radiometric quality. In this case, the task at hand was haze reduction. Haze can distort an image so that the features beneath it are harder to distinguish and label.

The bottom image is distorted by haze. The top image is the result after I applied the radiometric enhancement technique known as haze reduction.
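
One simple way to think about haze is as extra brightness added to every pixel in a band. The sketch below uses dark object subtraction, a basic textbook correction, to show the idea. It is a stand-in chosen for illustration and is probably not the algorithm behind the ERDAS IMAGINE haze reduction tool; the function name and the `percentile` parameter are hypothetical.

```python
import numpy as np

def dark_object_subtraction(image, percentile=0.0):
    """Dark object subtraction sketch.
    image: (bands, rows, cols). The darkest value in each band is assumed
    to be caused purely by haze and is subtracted from that whole band.
    percentile=0 uses the band minimum; a small value such as 1 is less
    sensitive to a few bad pixels."""
    image = image.astype(float)
    dark = np.percentile(image, percentile, axis=(1, 2), keepdims=True)
    return np.clip(image - dark, 0, None)

# A synthetic "clear" 3-band image whose darkest pixel in each band is 0.
clear = (np.arange(48).reshape(3, 4, 4) % 16).astype(float)

# Simulate haze as a constant offset per band (strongest in the first band).
haze = np.array([40.0, 25.0, 10.0]).reshape(3, 1, 1)
hazy = clear + haze

corrected = dark_object_subtraction(hazy)
print(corrected[0])
```

In this toy case the correction recovers the clear image exactly, because the haze was a constant offset and each band contained a truly dark pixel. Real haze varies across the scene, so this should be read as the basic idea rather than a complete method.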

Linking Image Viewer to Google Earth

Perhaps one of the most useful tools in ERDAS IMAGINE is the ability to sync the view of your satellite image to the same location and scale in Google Earth. This provides a great selective interpretation key that allows you to see what certain features are via text and symbols. With the views synced, as you scroll and zoom around in ERDAS, you can see various features labeled in Google Earth via symbols or text.

Resampling

Resampling is the process of changing the pixel size of a given image. In resampling, pixel sizes can be enlarged or reduced. In this instance, I used two different procedures to reduce pixel sizes.

The first method I used is called Nearest Neighbor. In this procedure each output pixel takes the value of the input pixel closest to it. This is the simplest method, but it can cause 'stair-stepping', where edges appear jagged and blocky, giving an overall rougher image in comparison to the original.
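
A minimal NumPy sketch of nearest neighbor resampling is shown here to make the idea concrete. The function name `resample_nearest` and the `factor` parameter are made up for this example, and it works on a single band only.

```python
import numpy as np

def resample_nearest(image, factor):
    """Nearest neighbor resampling of a 2D image.
    factor > 1 gives more, smaller pixels; factor < 1 gives fewer, larger ones."""
    rows, cols = image.shape
    new_rows, new_cols = int(round(rows * factor)), int(round(cols * factor))
    # Find which input pixel contains the center of each output pixel.
    src_r = ((np.arange(new_rows) + 0.5) / factor).astype(int)
    src_c = ((np.arange(new_cols) + 0.5) / factor).astype(int)
    src_r = np.clip(src_r, 0, rows - 1)
    src_c = np.clip(src_c, 0, cols - 1)
    # Copy the value of that input pixel; no new values are created.
    return image[np.ix_(src_r, src_c)]

image = np.array([[1, 2],
                  [3, 4]])

# Halve the pixel size: each input pixel becomes a 2x2 block.
nearest = resample_nearest(image, 2)
print(nearest)
```

Because values are only copied, the output contains exactly the same set of values as the input. That keeps the original data intact, but it is also why edges come out blocky.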

The second resampling method I used is bilinear interpolation. In this method the four nearest input pixels (a 2x2 area) are averaged, weighted by distance, to create each new, smaller pixel. The resulting image is smoother, but it loses some contrast because the pixel values are averaged.
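
Bilinear interpolation can be sketched in NumPy as a distance-weighted average of the four input pixels surrounding each output pixel center. As with any sketch, the function name `resample_bilinear` and the `factor` parameter are assumptions for this example, and edge pixels are handled here by simple clamping.

```python
import numpy as np

def resample_bilinear(image, factor):
    """Bilinear resampling of a 2D image.
    Each output value is a distance-weighted average of the four
    nearest input pixels (a 2x2 neighborhood)."""
    image = image.astype(float)
    rows, cols = image.shape
    new_rows, new_cols = int(round(rows * factor)), int(round(cols * factor))
    # Output pixel centers in input coordinates, clamped to the image edge.
    r = np.clip((np.arange(new_rows) + 0.5) / factor - 0.5, 0, rows - 1)
    c = np.clip((np.arange(new_cols) + 0.5) / factor - 0.5, 0, cols - 1)
    r0, c0 = np.floor(r).astype(int), np.floor(c).astype(int)
    r1, c1 = np.minimum(r0 + 1, rows - 1), np.minimum(c0 + 1, cols - 1)
    # Fractional distances are the interpolation weights.
    wr, wc = (r - r0)[:, None], (c - c0)[None, :]
    top = image[np.ix_(r0, c0)] * (1 - wc) + image[np.ix_(r0, c1)] * wc
    bottom = image[np.ix_(r1, c0)] * (1 - wc) + image[np.ix_(r1, c1)] * wc
    return top * (1 - wr) + bottom * wr

# A sharp edge: dark column next to a bright column.
image = np.array([[0, 100],
                  [0, 100]])

smooth = resample_bilinear(image, 2)
print(smooth)
```

The sharp jump from 0 to 100 turns into a gradual ramp with in-between values that did not exist in the input. This is the smoothing, and the loss of contrast at edges, described above.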

Top left: original image

Top right: Nearest Neighbor resampled image, visible roughness due to stair-stepping

Bottom left: Bilinear Interpolation resampled image, smoother but with less contrast between colors
